October 25, 2021

By Taylor Beck

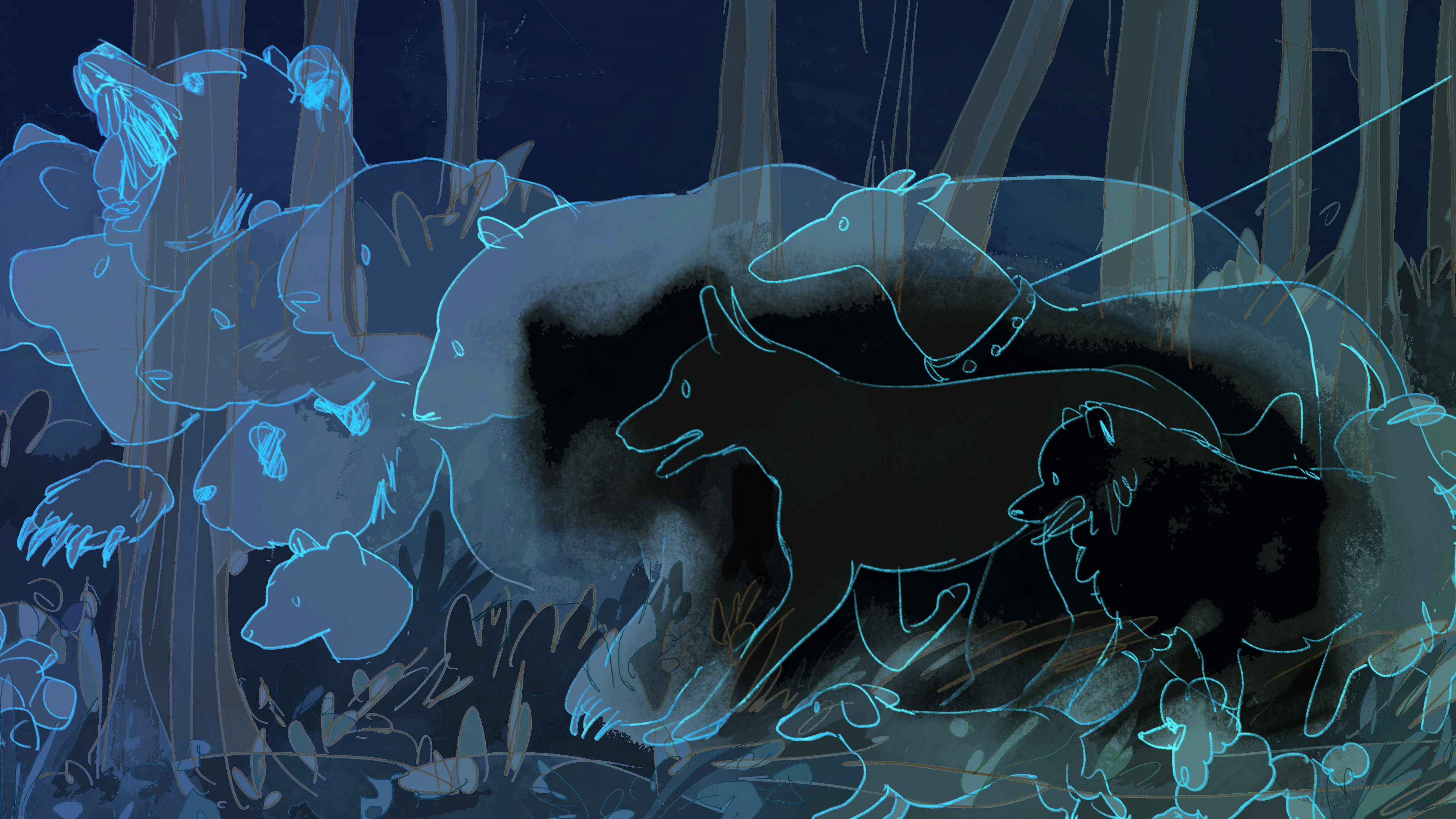

Illustrations by Amey Yun Zhang

A pigeon looks at pictures. The bird knows nothing about heart attacks, nothing about cancer. Yet, trained to classify mammograms and cardiograms, it can outperform doctors.

This ability to sort by sight, without insight, is like the dumb learning done by machines. Online classifiers learn your taste in books, movies, and mates. Similar programs can tell from brain activity what a human or monkey sees -- even what he remembers, or dreams. They seem to read minds, and may soon drive cars. Yet their know-how is narrow; they’re like pigeons pecking buttons with mindless competency. As they get smarter, though, these bots are inspiring a new psychology. Just as behaviorists trained pigeons and rats, cognitive scientists are training machines to understand how humans think.

What interests a group of cognitive scientists at Princeton is how we learn the categories that structure our minds. Their goal is to map these conceptual terrains using machines.

“This is exciting because reasoning about humans in the real world is what motivates us as psychologists,” says Ruairidh Battleday, a graduate student in the Department of Computer Science at Princeton. Working with Professor of Psychology and Computer Science Tom Griffiths and postdoctoral researcher Josh Peterson, Battleday has co-authored a series of ambitious papers proposing a new way to study an ancient riddle.

Philosophers have puzzled over categories since antiquity. Plato proposed ideal types of real-life objects, and Linnaeus applied nested categories to classify living species. At bottom, such concepts are what our minds are made of, the format that structures our thoughts. Yet the classifying we do effortlessly when we see is a challenge to machines. What interests the team at Princeton is how we humans learn the categories that organize our minds.

Years ago, at UC Berkeley, Griffiths learned of a set of images: birds and planes, classified by people (“Is it a bird, is it a plane?”) to train machines. “They had gathered more behavioral data on how people use categories than the entire history of cognitive psychology,” Griffiths says. “We thought, clearly this is a thing that’s possible to do -- to collect rich data sets at this scale.” In a future of self-driving cars and human-free factories, machines will need to discriminate object types quickly. What interested the team, though, was not designing a “perfect” image classifier, as engineers do. They wanted to find a more fallible AI: a machine that “sees like a human.” If a machine makes the same errors we do -- if it confuses, say, a blurry deer for a horse -- it could teach us how humans see. Their idea was to train computers not on the “ground truth” -- whether that thing in the sky is really a bird, a plane, or Superman -- but on human uncertainty.

The project grew to encompass not two categories, but ten. The team started with a set of images used in machine vision, called CIFAR-10. They collected 500,000 judgments of 10,000 images -- data at a scale unheard of in traditional psychology labs. The lab’s 2019 paper with assistant professor of computer science Olga Russakovsky showed that machines trained on human confidence -- 70% deer versus 95% deer; 50/50 airplane or bird -- learned to classify more the way that humans do. These “human-like” classifiers could handle tiny changes to images that stump those trained on ground truth alone; a stop sign with a mark on it, for example, can look like a speed limit sign to an algorithm reliant on “cheats” based on superficial features. The “human-confidence” classifier mapped the relationships between categories better than traditional machine-vision algorithms, too: dogs more similar to cats than to cars.
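
To make the idea of training on human confidence concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the team’s code: the four-class subset, the 70/30 vote split, and the two model outputs are all hypothetical numbers. It compares the usual cross-entropy loss computed against a one-hot “ground truth” label with the same loss computed against a soft label built from human votes.

```python
import math

def cross_entropy(target, predicted):
    """Cross-entropy between a target distribution and a predicted one."""
    return -sum(t * math.log(p) for t, p in zip(target, predicted) if t > 0)

# Hypothetical subset of classes, for illustration only.
CLASSES = ["airplane", "bird", "deer", "horse"]

# One blurry image, labeled two ways (assumed numbers).
hard_label = [0.0, 0.0, 1.0, 0.0]  # ground truth: it is a deer
soft_label = [0.0, 0.0, 0.7, 0.3]  # human votes: 70% deer, 30% horse

# Two hypothetical model outputs for the same image.
overconfident = [0.01, 0.01, 0.97, 0.01]  # nearly certain it is a deer
human_like = [0.01, 0.01, 0.68, 0.30]     # shares the human doubt

for name, label in [("hard", hard_label), ("soft", soft_label)]:
    loss_over = cross_entropy(label, overconfident)
    loss_human = cross_entropy(label, human_like)
    print(f"{name} label: overconfident={loss_over:.3f}, human-like={loss_human:.3f}")
```

Against the hard label, the overconfident model gets the lower loss. Against the soft label, the model that hesitates between deer and horse the way people do is the one rewarded.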

The algorithms, called convolutional neural networks, or CNNs, are like “a playground” for psychologists, says Peterson, who co-authored the study. “You have these networks, and there are different knobs you can turn: you can think of any number of hypotheses.” In their case, the hypothesis was that a machine that acts like a person should make the errors a human would. “It’s kind of counter-intuitive,” Peterson adds. “A perfect model -- perfect in the sense that it’s doing the best it can, given the data we’ve given it -- should be confused, because the world is confusing, intrinsically.” Yet engineers of machine vision tend to treat errors as random noise -- not a pattern to be optimized for a more human-like intelligence.

The next step was to peer under the hood of the algorithms to see how they learned. The team did this by pitting four algorithms against one another -- each tuned to a different “setting” for learning. If one algorithm classified natural pictures more the way humans did, it would be considered the “winner”: the most human bot. The team would take note of the knob settings. What representations was the algorithm using, in its hidden layers of structure, to see as we do?

In 2020, the team published a paper in Nature Communications unveiling what they’d found. The paper’s first reference was not an AI pioneer, not Google DeepMind, but Aristotle: Categories, published around 350 B.C.E. The learning strategies cognitive scientists call “prototype” and “exemplar,” used in this study, echo ancient debates. Is there an ideal Platonic “prototype” for each category, to which each new thing we see is compared? Or are we creatures of flux, as Aristotle had it, our categories continually updated with data?

The artificial minds designed for the study suggested answers to how we represent the concepts we learn -- how we derive concepts from a world of specifics. What mattered most, surprisingly, was not whether the machine thought like Plato or Aristotle, in prototypes or exemplars. Structure was the key to categories: if an algorithm’s models reflected similarity the way humans do -- dogs closer to cats; planes to trucks -- then it performed more like a person, regardless of the recipe driving its learning. A pigeon may peck at the right picture of a tumor, but not represent “cancer” near “heart attack” in the category of deadly disease. Similarly, smarter machines may only emerge when they learn to cluster the visual world as people do.
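
The difference between the two strategies can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the models from the paper: the two-dimensional “features,” the example points, and the similarity rule (similarity falls off exponentially with distance) are all hypothetical choices. A prototype model compares a new item to each category’s average; an exemplar model compares it to every stored example.

```python
import math

def distance(a, b):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def normalize(scores):
    """Rescale scores so they sum to 1, like probabilities."""
    total = sum(scores.values())
    return {category: s / total for category, s in scores.items()}

def prototype_scores(item, examples_by_category):
    """Compare the item to the mean (the prototype) of each category."""
    scores = {}
    for category, examples in examples_by_category.items():
        prototype = [sum(dim) / len(examples) for dim in zip(*examples)]
        scores[category] = math.exp(-distance(item, prototype))
    return normalize(scores)

def exemplar_scores(item, examples_by_category):
    """Compare the item to every stored example and sum the similarities."""
    scores = {}
    for category, examples in examples_by_category.items():
        scores[category] = sum(math.exp(-distance(item, e)) for e in examples)
    return normalize(scores)

# Hypothetical 2-D features standing in for what a network extracts from images.
examples = {
    "bird": [(1.0, 1.0), (1.2, 0.8), (0.8, 1.2)],
    "airplane": [(3.0, 3.0), (3.2, 2.8), (2.8, 3.2)],
}
new_item = (1.5, 1.5)  # an ambiguous shape in the sky, closer to the birds

proto = prototype_scores(new_item, examples)
exemp = exemplar_scores(new_item, examples)
print("prototype:", proto)
print("exemplar: ", exemp)
```

On this toy data both recipes lean toward “bird,” because both depend on the same underlying layout of the feature space. That is in the spirit of the finding described above: how the space is arranged matters more than which rule reads it out.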

“I remember surfing with some people a few months ago,” Battleday recalls. “They knew exactly which waves to take. I was there trying to analyze, ‘what would be a good one?’, and I kept getting it wrong.” The surfers navigated the waves, he thinks, because they’d learned a new structure for “what makes a good wave”: a shape of category that doesn’t exist in the amateur’s mind. Battleday struggled to surf, like a pigeon untrained, blinded by the wrong categories.