This article is from the Robotics: Safe, resilient, scalable, and in service of humanity issue of EQuad News magazine.

By John Sullivan, Office of Engineering Communications

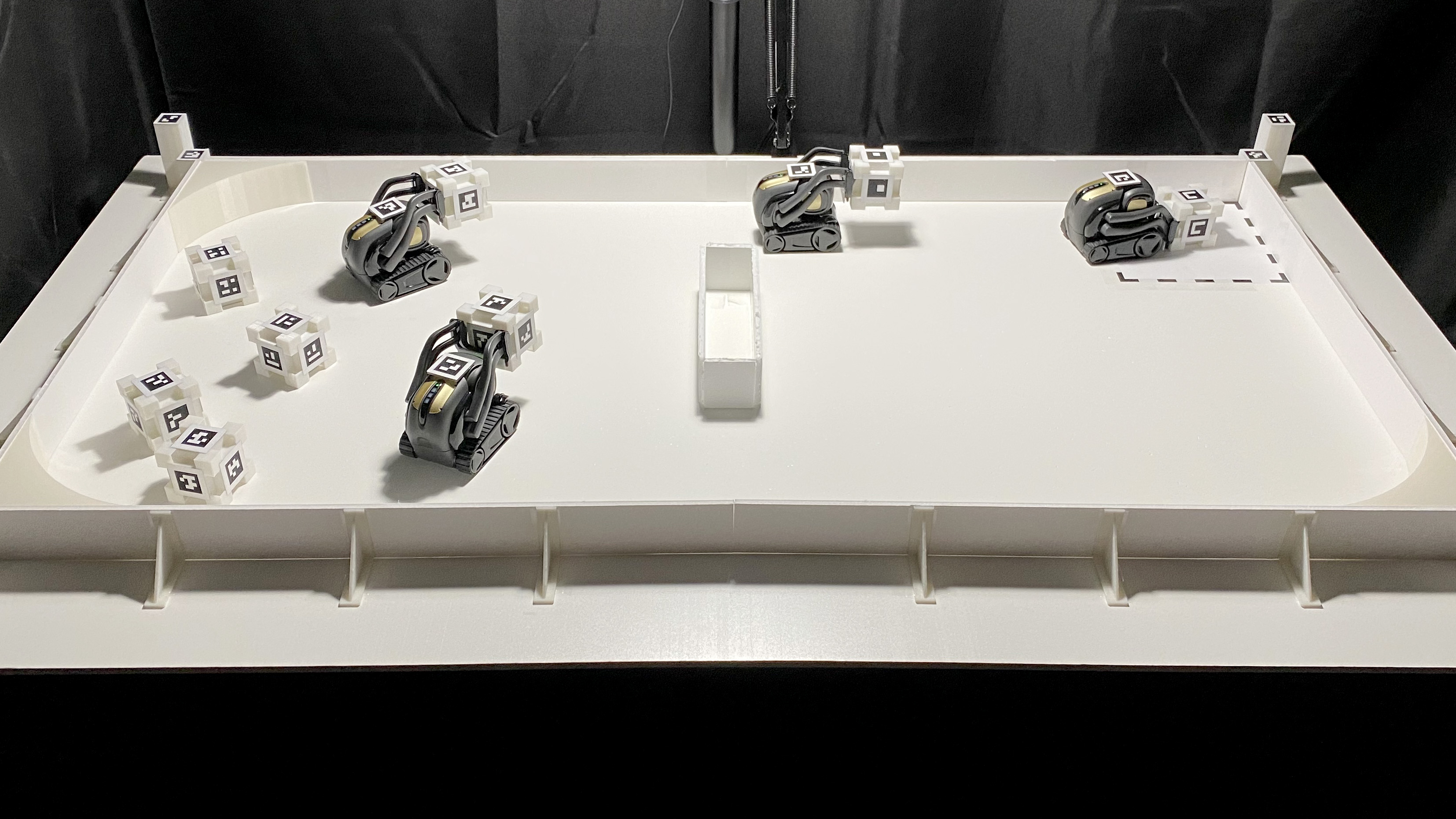

In Jimmy Wu’s apartment, a scrum of mini robots bump, swerve, and zip chaotically across a tabletop. It looks like an aggressive bumper car rally, but within a few minutes, order emerges.

The swarm coalesces as the robots race in formation to scoop up bits of trash and deposit them in a goal marker.

The amazing thing: The robots are teaching themselves.

“We’re trying to tell the robots ‘Look, you’re going to get a reward every time you successfully put a piece of trash into the wastebasket,’ and that’s all they know,” said Szymon Rusinkiewicz, the David M. Siegel ’83 Professor of Computer Science. “We have algorithms where if they do this thousands and thousands of times in simulation, eventually they learn what it is that causes them to get rewards.”

Wu is a graduate student on Rusinkiewicz’s research team, which is working to apply a technique called reinforcement learning to robotics. The method, familiar to dog trainers everywhere, offers rewards for good performance. In the case of robots, the rewards are mathematical, like points in a video game. The basic algorithms guiding the robots’ behavior are adaptable and change with the rewards, so the robots can develop their own methods for solving problems based on millions of computer simulations.

Rusinkiewicz said the long-term goal will involve cooperation from many different Princeton labs working on projects such as sensor arrays, safety protocols, and group dynamics.

“The work dovetails very nicely with research that’s going on by other people in robotics,” he said.

In a recent project, the researchers assigned action figure-sized robots the task of picking up small plastic blocks labeled trash and moving them into a goal. At the start, all the robots were equipped with tiny bulldozers, but as the experiment progressed, the bots used different techniques. Rusinkiewicz said the robots learned to work together in surprising ways.

“The throwing agent throws stuff in the general direction of the goal, and another agent hangs out near the goal, picks it up and drops it in,” he said. “The exciting thing is, we are giving these agents the same setup, the same reward, but they learn to exploit their own strengths and they learn to cooperate. We are very interested in how far we can develop this idea. Can we get agents that learn to collaborate, to have even more specialized ideas without telling them what to do?”