September 10, 2015

Researchers have found an automated way to identify and eliminate those stray soda cans, roaming cars and photobombing strangers that can send favorite photos to the recycle bin.

Called distractors, they are elements that take away from the central focus of the photo.

"Clutter," said Ohad Fried, a graduate student in computer science at Princeton University and the lead author of the paper on this research. "These are things in the photo that, if they were removed, would make the photo better."

In a paper presented at the 2015 Computer Vision and Pattern Recognition Conference in Boston this summer, a team from Princeton and the software company Adobe described an early version of a one-click system to remove distracting elements from photos. Currently, Fried said, many programs such as Photoshop let users manually select and remove distracting elements. But that typically takes time and requires a degree of skill that casual users often do not have.

"For most casual photographers, this effort is neither possible nor warranted," the researchers wrote. "Last year Facebook reported that people were uploading photos at an average rate of 4,000 images per second. The overwhelming majority of these pictures are casual — they effectively chronicle a moment without much work on the part of the photographer."

The researchers distinguished distractors from more general flaws in photos, such as problems with tone or lighting. They noted that photo editing programs already handle such global problems quite well, but identifying distractors is more difficult because it requires selecting specific content rather than adjusting a global property of a photo such as color saturation.

"I find this work intriguing because detecting distracting elements in an image is in fact a semantic analysis, a high-level analysis of a scene," said Daniel Cohen-Or, a professor of computer science at Tel Aviv University who was not involved in the research.

To identify elements that people find distracting in photos, the researchers used two data sets of images. For the first, a set of 1,073 images, they turned to Amazon's Mechanical Turk system (which enlists large numbers of people to perform set tasks such as reviewing photographs) to identify distracting elements.

"The nice part about this set is that we have multiple entries per image," said Fried, who works in the lab of Adam Finkelstein, a professor of computer science at Princeton and a co-author of the paper. "The problem is that these are people who don't necessarily care about the images."

For the second set, which contained more than 5,000 images, the researchers used an iPhone app called Fixel to note changes to images that photographers had retouched and exported, shared or saved. (Fixel, an experimental app developed at Adobe, asked users to check a box to allow photos to be used for research; the researchers said about 25 percent of users did so.) The second set mirrored the first: each image had only one entry, but the users cared enough about the shots to take the time to edit them.
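
One way to picture how multiple entries for the same image could be combined is to average the marked regions pixel by pixel. The sketch below is only an illustration under that assumption, not the researchers' actual procedure; the function name `average_annotations` and the tiny 2x2 masks are hypothetical.

```python
def average_annotations(masks):
    """Average several binary masks into a per-pixel agreement map.

    Each mask is a list of rows, with 1 where an annotator marked a
    distractor and 0 elsewhere. All masks must share the same shape.
    """
    if not masks:
        raise ValueError("need at least one mask")
    height, width = len(masks[0]), len(masks[0][0])
    count = len(masks)
    return [
        [sum(mask[y][x] for mask in masks) / count for x in range(width)]
        for y in range(height)
    ]


# Four hypothetical annotators marking a 2x2 image.
masks = [
    [[0, 1], [0, 0]],
    [[0, 1], [1, 0]],
    [[0, 1], [0, 0]],
    [[0, 0], [0, 0]],
]
agreement = average_annotations(masks)

# Keep only pixels that at least half of the annotators marked (assumed cutoff).
consensus = [[value >= 0.5 for value in row] for row in agreement]
```

Averaging rewards regions that many people agree on, which is the strength of a set with multiple entries per image; a set with a single entry per image has no such agreement signal.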

The researchers used the data to create detectors for a wide range of distracting elements.

"We have a specific car detector in the code because people often want to eliminate cars that wander into the frame," Fried said. "We have a face detector. If the face is large and in the center of the photo, we probably don't want to remove it. But if it is coming in from the side, it might be a photobomb."

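The face rule Fried describes can be sketched as a simple position-and-size check. This is a minimal illustration, not the code from the paper: the function `classify_face`, its thresholds (`large_frac`, `side_frac`) and the three labels are all assumptions chosen for the example.

```python
def classify_face(face_box, image_size, large_frac=0.05, side_frac=0.2):
    """Label a detected face as 'keep', 'candidate' or 'unsure'.

    face_box is (x, y, width, height) in pixels; image_size is
    (width, height). The thresholds are illustrative guesses.
    """
    x, y, w, h = face_box
    img_w, img_h = image_size
    area_frac = (w * h) / (img_w * img_h)   # share of the image the face covers
    center_x = (x + w / 2) / img_w          # horizontal center, 0 = left, 1 = right
    near_side = center_x < side_frac or center_x > 1 - side_frac

    if area_frac >= large_frac and not near_side:
        return "keep"        # large and central: probably the subject
    if near_side:
        return "candidate"   # entering from the side: possible photobomb
    return "unsure"          # small but central: leave for other cues


image_size = (1000, 800)
subject = classify_face((400, 250, 250, 300), image_size)
intruder = classify_face((0, 300, 120, 150), image_size)
background = classify_face((450, 350, 60, 60), image_size)
```

A rule like this only proposes candidates; deciding whether to actually remove a face would still depend on the rest of the system.
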
Besides creating task-specific detectors, the researchers also created a weighting system that assigned values for different arrangements of colors and shapes in photos. They then created programs to train the software to identify and eliminate elements with the characteristics of distractors.
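
A weighting system of this kind can be pictured as a weighted sum of measurements taken on each region of a photo, with regions scoring above a cutoff flagged as distractors. The sketch below assumes that simple linear form; the feature names, the weights and the threshold are hypothetical and hand-picked here, whereas in a trained system they would be learned from the labeled images.

```python
def distractor_score(features, weights):
    """Weighted sum of a region's feature values; missing features count as 0."""
    return sum(weight * features.get(name, 0.0) for name, weight in weights.items())


def flag_distractors(segments, weights, threshold=0.5):
    """Return the names of segments whose score exceeds the threshold."""
    return [
        name
        for name, features in segments.items()
        if distractor_score(features, weights) > threshold
    ]


# Hypothetical weights: border proximity and contrast raise the score,
# large relative size (likely the main subject) lowers it.
weights = {"near_border": 0.5, "color_contrast": 0.25, "relative_size": -0.25}

# Hypothetical regions with feature values scaled to the range 0..1.
segments = {
    "soda can": {"near_border": 1.0, "color_contrast": 0.8, "relative_size": 0.1},
    "main subject": {"near_border": 0.0, "color_contrast": 0.4, "relative_size": 0.6},
}

flagged = flag_distractors(segments, weights)
```

In this toy example the small, high-contrast object at the border is flagged, while the large central region is left alone.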

The researchers said the results were promising but definitely left room for improvement. Many elements in the test data set were successfully removed. But in some cases critical elements of the photo were identified as distractors. In others, particularly with more complex photo elements, the distractors were correctly identified but the program was unable to properly fill the removed area to match the photo. The researchers said each of the failures suggested new methods for improvement.

"In the future, it might become accurate enough to be used in a photo editing product," Fried said. "Right now, it is a very interesting research question."

In addition to Fried and Finkelstein, the paper's authors include Eli Shechtman and Dan Goldman of Adobe Research.

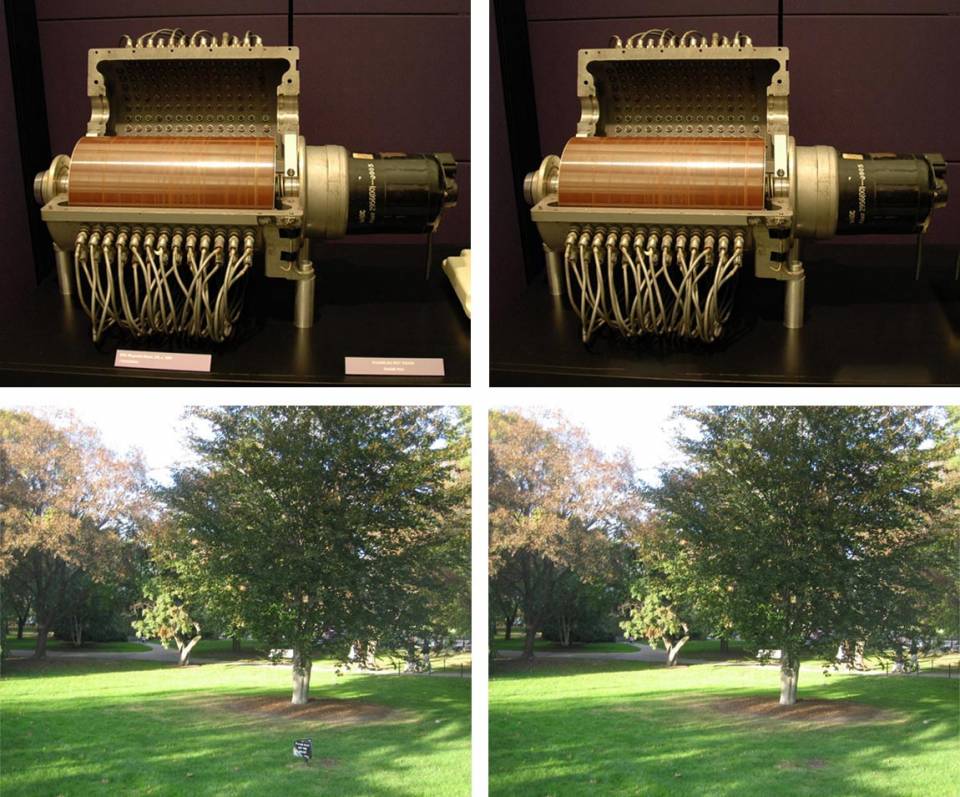

Researchers including members of the Princeton computer science department have developed a program that automatically removes distracting elements from photographs. Although they said the technique needs further work before it could be ready for general use, the researchers demonstrated its use by removing distracting elements from the photos shown here. (Photos courtesy of Ohad Fried and Adam Finkelstein, Department of Computer Science, Princeton University; and Eli Shechtman and Dan Goldman, Adobe Research)