December 22, 2020

By Steven Schultz, Office of Engineering Communications

A team of Princeton University researchers was a top finisher in the international 2020 Bell Labs Prize competition, honored for developing a method that may allow computers to learn from data without compromising the privacy of people who furnished the data.

Products such as self-driving cars and sensitive services such as healthcare systems increasingly use a type of artificial intelligence called deep learning to improve performance and outcomes on the fly, but this constant processing of data also poses significant privacy risks to the people whose data is being processed. Nokia, the technology company that owns the historic Bell Labs research organization, awarded the Princeton team second place in its annual competition for "InstaHide," a proposal for overcoming what seemed like a fundamental tradeoff between privacy and learning.

The competition, which recognizes "disruptive innovations that will define the next industrial revolution," drew 208 proposals from 26 countries. The second-place prize carries an award of $50,000. The Princeton team includes Sanjeev Arora, the Charles C. Fitzmorris Professor in Computer Science; Kai Li, the Paul M. Wythes '55 P86 and Marcia R. Wythes P86 Professor in Computer Science; Yangsibo Huang, a graduate student in electrical engineering; and Zhao Song, a postdoctoral fellow in computer science.

"Running machine learning on data while maintaining privacy is a challenging and critical topic of research with important implications for the use of data science," said Andrea Goldsmith, dean of Princeton's School of Engineering and Applied Science. "I’m thrilled our Princeton team has been awarded second place in the Bell Labs Prize competition to advance knowledge in this important area."

Devices and distributed systems increasingly rely on deep learning – for example, a smart speaker that learns the voice of its owner or a network of hospitals that seeks patterns in patient data that might improve treatment decisions. Often these systems transmit the data to powerful computers that run the learning algorithms far from where the data originated. Existing methods for encrypting data either do not allow deep learning to be performed on the encrypted data or slow training down dramatically.

"It's been a challenge for the community to see whether there are any other practical approaches," said Li.

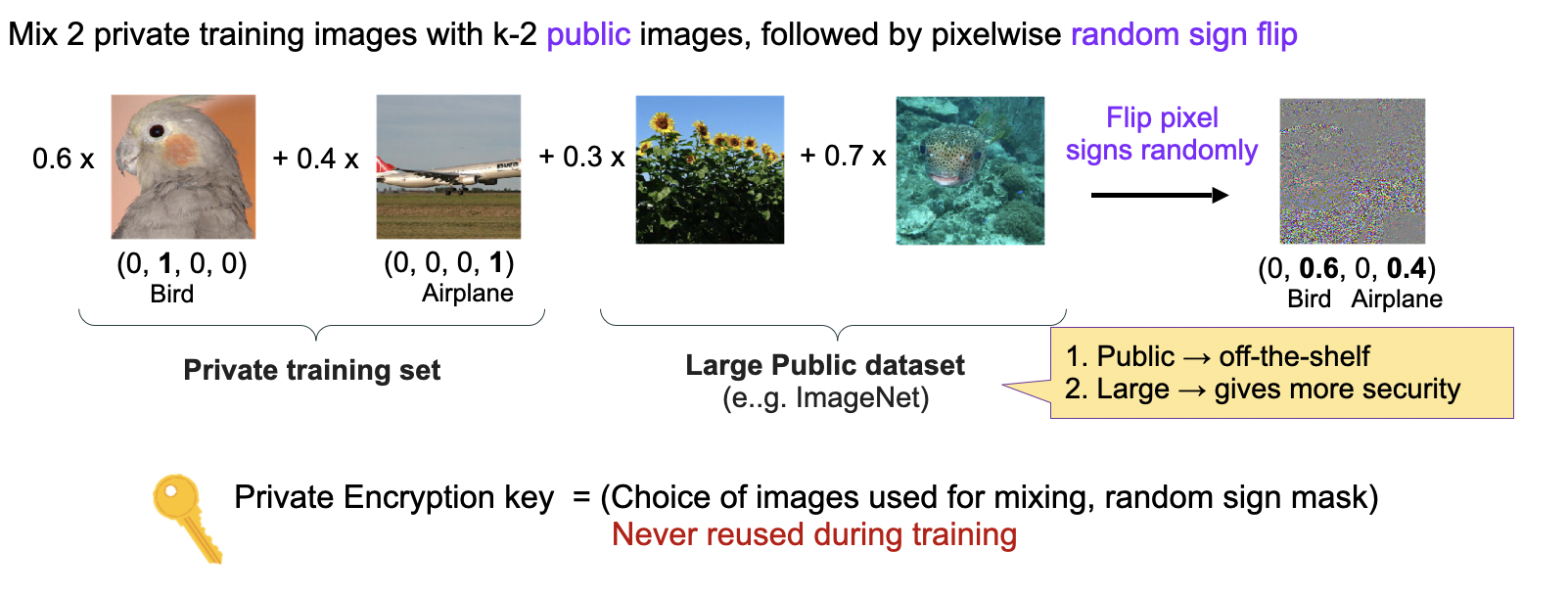

InstaHide, which is designed specifically for deep learning involving images, avoids this challenge by applying what the researchers described as "light encryption," not on the raw data but on the results of an initial step in the deep learning process.

"After our encryption, you can still run learning algorithms almost as though you were feeding the data directly," said Huang.

The team presented its results at the International Conference on Machine Learning in July. A similar approach could be applied to deep learning on text instead of images, said Arora. The same team, along with Assistant Professor of Computer Science Danqi Chen, an expert in natural language processing and director of the Princeton NLP Group, recently reported TextHide, which demonstrates such an approach.

"This is probably a first-cut solution," Arora said, noting that it's important for the broader research community to examine and test the effectiveness of the privacy protections and discover other such solutions.

The researchers noted that the technology might not end up being completely secure against all privacy attacks, but may create a high enough barrier to make attacks impractical. "A secure system is not something that you can break or not break," said Song. "It's more like, 'How quickly can you break the system and what are the results of breaking the system?' We are still trying to understand the precise security level of our system, but one thing we can know is that clearly this is easier to integrate into machine learning than trying to use classical cryptography."

A figure from the paper describes how InstaHide combines images and flips pixels in a light encryption process that allows computers to carry out deep learning algorithms on images without revealing sensitive information about the contents of the images.

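To make the figure's description concrete, here is a minimal sketch of the two operations it names: combining a private image with a few other images, then flipping the signs of pixels with a random mask. This is an illustrative simplification, not the team's implementation; the function name `instahide_style_encode`, the choice of k = 4 images, the uniform random mixing weights, and the representation of images as flat lists of floats are all assumptions made for the example, and many details of the published scheme are omitted.

```python
import random

def instahide_style_encode(private_image, pool, k=4, rng=None):
    """Hypothetical sketch: mix `private_image` with k-1 images from `pool`,
    then multiply each pixel by a random +1/-1 sign.

    Images are flat lists of floats of equal length (a simplifying assumption).
    Returns the encoded image, the mixing weights, and the sign mask.
    """
    rng = rng or random.Random()
    # Pick k-1 other images to combine with the private one.
    others = rng.sample(pool, k - 1)
    images = [private_image] + others
    # Random nonnegative mixing weights, normalized to sum to 1.
    raw = [rng.random() for _ in images]
    total = sum(raw)
    weights = [w / total for w in raw]
    # Pixel-wise weighted combination of the k images.
    mixed = [
        sum(w * img[i] for w, img in zip(weights, images))
        for i in range(len(private_image))
    ]
    # Fresh random sign mask: each pixel is kept (+1) or flipped (-1).
    mask = [rng.choice((1, -1)) for _ in mixed]
    encoded = [m * x for m, x in zip(mask, mixed)]
    return encoded, weights, mask

if __name__ == "__main__":
    rng = random.Random(0)
    pool = [[float(i + j) for j in range(8)] for i in range(10)]
    secret = [1.0] * 8
    encoded, weights, mask = instahide_style_encode(secret, pool, k=4, rng=rng)
    print(encoded)
```

The encoded image is what a remote machine would train on. Because the mask is random and unknown to that machine, the encoded values on their own do not directly reveal the original pixels, while the mixing keeps the data in a form that a learning algorithm can still use.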

The researchers said that in addition to developing a novel result, a satisfying part of the work was the collaboration itself, which resulted from a series of chance conversations that brought together disparate expertise.

Huang, who had been accepted into the Ph.D. program in electrical engineering, was trying to decide whether to choose Princeton when she met Li during a campus visit in the spring of 2019. Li, who has broad expertise in building distributed and parallel computing systems, asked her about her interest in using machine learning to improve clinical decisions in healthcare and noted that hospitals face problems when they want to share and learn from data but must strictly preserve the privacy of patients.

"So that was the very beginning of the project," she said. Because Princeton encourages cross-disciplinary work, it was easy for Li, a computer scientist, to serve as Huang's advisor when she joined the electrical engineering department. The collaboration grew as the two developed ideas for preserving privacy in machine learning and wanted to quantify the level of security that their ideas might provide. In a hallway of the computer science building, Huang met Song, whose research is in the underlying mathematics and theory of machine learning. He was doing a postdoctoral fellowship at the Institute for Advanced Study in Princeton, where he had worked with Arora, and was joining Princeton University for another postdoctoral position.

"The idea we worked on together was an interesting project but we realized it might not have that much impact," Huang said. Song and Li suggested bringing in Arora, whose work also aims for a formal mathematical understanding of machine learning and deep learning. "Then, together, we came up with InstaHide," she said. "InstaHide is amazing teamwork. We can see how the two disciplines of theory and practical systems interact with each other. When you put them together you get something amazing."

For Song, the collaboration also was transformative. After doing his Ph.D. in theoretical computer science and machine learning, working with Li "completely changed my mind on research," he said. Now, as he seeks faculty positions, he is looking for opportunities to continue integrating his mathematical background with building practical, privacy-preserving systems for machine learning. "I feel this is more useful for the world and for our lives in the future," Song said.

In the meantime, the group continues to collaborate and refine their ideas. "It is a new way for people to think about how to solve this problem," Li said. "And whenever you have a new approach it will take some time for the community to further develop the approach before it becomes a practical technology that everyone accepts."