November 17, 2014

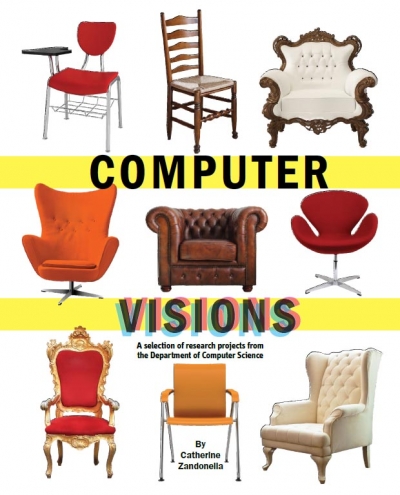

JIANXIONG XIAO TYPES “CHAIR” INTO GOOGLE’S search engine and watches as hundreds of images populate his screen. He isn’t shopping — he is using the images to teach his computer what a chair looks like.

This is much harder than it sounds. Although computers have come a long way toward being able to recognize human faces in photos, they don’t do so well at understanding the objects in everyday 3-D scenes. For example, when Xiao, an assistant professor of computer science at Princeton, tested a “state-of-the-art” object recognition system on a fairly average-looking chair, the system identified the chair as a Schipperke dog.

The problem is that our world is filled with stuff that computers find distracting. Tables are cluttered with the day’s mail, chairs are piled with backpacks and draped with jackets, and objects are swathed in shadows. The human brain can filter out these distractions, but computers falter when they encounter shadows, clutter and occlusion by other objects. Improving software for object recognition has many benefits, from better ways to analyze security-camera images to computer vision systems for robots. “We start with chairs because they are the most common indoor objects,” Xiao said, “but of course our goal is to dissect complex scenes.”

Xiao has developed an approach to teaching computers to recognize objects that he likes to call a “big 3-D data” approach because he feeds a large number of examples into the computer to teach it what an object — in this case a chair — looks like.

The chairs that he uses as training data are not pictures of chairs, nor are they the real thing. They are three-dimensional models of chairs, created with computer graphics (CG) techniques, that are available from 3D Warehouse, a free service that allows users to search and share 3-D models. With help from graduate student Shuran Song, Xiao scans the 3-D models with a virtual camera and depth sensor that maps the distance to each point on the chair, creating a depth map for each object. These depth maps are then converted into a collection of points, or a “point cloud,” that the computer can process and use to learn the shapes of chairs.
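The conversion from a depth map to a point cloud can be illustrated with a minimal sketch. It assumes a simple pinhole camera model; the function name `depth_map_to_point_cloud` and the parameters `fx`, `fy`, `cx` and `cy` (focal lengths and image center, in pixels) are hypothetical and are not taken from the researchers' software.

```python
def depth_map_to_point_cloud(depth, fx, fy, cx, cy):
    """Back-project a depth map into a list of (x, y, z) points.

    depth: 2-D list of distances along the camera axis; 0 means "no reading".
    fx, fy, cx, cy: assumed pinhole-camera intrinsics (illustrative values only).
    """
    points = []
    for v, row in enumerate(depth):          # v is the pixel row
        for u, z in enumerate(row):          # u is the pixel column
            if not z:
                continue                     # skip pixels with no depth reading
            x = (u - cx) * z / fx            # horizontal offset scaled by depth
            y = (v - cy) * z / fy            # vertical offset scaled by depth
            points.append((x, y, z))
    return points


# A tiny 2x2 depth map with one missing reading, purely for illustration.
depth = [
    [0.0, 2.0],
    [2.0, 4.0],
]
cloud = depth_map_to_point_cloud(depth, fx=1.0, fy=1.0, cx=0.5, cy=0.5)
```

Each pixel with a valid depth reading becomes one 3-D point, so a virtual camera placed at hundreds of angles around a model yields hundreds of such clouds.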

The advantage of using CG chairs rather than the real thing, Xiao said, is that the researchers can rapidly record the shape of each chair from hundreds of different viewing angles, creating a comprehensive database about what makes a chair a chair. They can also scan hundreds of chairs of various shapes, including office chairs, sofa chairs, kitchen chairs and the like. “Because it is a CG chair and not a real object, we can put the sensor wherever we need,” Xiao said.

For the technique to work, the researchers also must help the computer learn what a chair does not look like. For this, the researchers use a 3-D depth sensor, like the one found in the Microsoft Kinect sensor for the Xbox 360 video game console, to capture depth information from real-world, non-chair objects such as toilets, tables and washbasins.

Like a child learning to do arithmetic by making a guess and then checking his or her answers, the computer studies examples of chairs and non-chairs to learn the key differences between them. “The computer can then use this knowledge to tell not only whether an object is a chair but also what type of chair it is,” Song said.
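The guess-and-check idea can be sketched with a perceptron, one of the simplest learning rules of this kind. This is a stand-in chosen for illustration, not the classifier the researchers used, and the two features (object height and width in meters) and all the numbers below are invented for the example.

```python
def train_perceptron(examples, epochs=20):
    """Learn weights from (features, label) pairs; label is +1 (chair) or -1 (not a chair)."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            score = sum(w * f for w, f in zip(weights, features)) + bias
            guess = 1 if score > 0 else -1
            if guess != label:
                # Wrong guess: nudge the weights toward the correct answer.
                weights = [w + label * f for w, f in zip(weights, features)]
                bias += label
    return weights, bias


def predict(weights, bias, features):
    """Return +1 if the learned rule says "chair", otherwise -1."""
    score = sum(w * f for w, f in zip(weights, features)) + bias
    return 1 if score > 0 else -1


# Hypothetical training data: [height_m, width_m] for chairs and for tables.
examples = [
    ([0.9, 0.5], 1),
    ([1.0, 0.6], 1),
    ([0.7, 1.5], -1),
    ([0.8, 1.8], -1),
]
weights, bias = train_perceptron(examples)
```

After training, `predict(weights, bias, [0.95, 0.55])` classifies a new, unseen shape using only what was learned from the examples.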

Once this repository of chair knowledge is built, the researchers put it into use to search for chairs in everyday scenes. To improve the accuracy of the scanning, the researchers built a virtual “sliding shapes detector” to skim slowly over the scenes, like a magnifying glass skimming over a picture, only in three dimensions, looking for structures that the computer has learned are associated with different types of chairs.
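The sliding search can be sketched as a box that steps through a point cloud along all three axes. This is a simplified illustration, not the published detector: the function names are hypothetical, and `score_fn` is a placeholder for whatever learned classifier scores the points inside each box (here it simply counts them).

```python
def axis_positions(lo, hi, step):
    """Evenly spaced window origins from lo up to at most hi."""
    count = int((hi - lo) / step) + 1
    return [lo + i * step for i in range(count)]


def sliding_window_3d(points, window, step, score_fn, threshold):
    """Slide an axis-aligned box over the scene and keep high-scoring positions."""
    wx, wy, wz = window
    xs, ys, zs = zip(*points)
    detections = []
    for x0 in axis_positions(min(xs), max(xs), step):
        for y0 in axis_positions(min(ys), max(ys), step):
            for z0 in axis_positions(min(zs), max(zs), step):
                # Collect the points that fall inside the current box.
                inside = [p for p in points
                          if x0 <= p[0] < x0 + wx
                          and y0 <= p[1] < y0 + wy
                          and z0 <= p[2] < z0 + wz]
                score = score_fn(inside)
                if score >= threshold:
                    detections.append(((x0, y0, z0), score))
    return detections


# Toy scene: one stray point plus a dense cluster standing in for an object.
scene = [(0.0, 0.0, 0.0),
         (2.0, 0.0, 0.0), (2.5, 0.0, 0.0), (2.0, 0.5, 0.0), (2.5, 0.5, 0.0)]
hits = sliding_window_3d(scene, window=(1.0, 1.0, 1.0), step=1.0,
                         score_fn=len, threshold=4)
```

In this toy scene only the box that covers the dense cluster passes the threshold; a real detector would replace the point count with a score from the trained chair models.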

Because the computer did its training on such a large and comprehensive database of examples, the program can detect chairs that are partially blocked by tables and other objects. It can also spot chairs that are cluttered with stuff because it can subtract away the clutter. The detection of objects using depth information rather than color, which is what determines shapes in most photos, allows the computer to ignore the effect of shadows on the objects.

In tests, the new method significantly outperformed the state-of-the-art systems on images, Xiao said. The same technology can also be generalized to other object categories, such as beds, tables and sofas. The researchers presented the work, titled “Sliding shapes for 3D object detection in RGB-D images,” at the 2014 European Conference on Computer Vision.

-By Catherine Zandonella